Recently I’ve been evaluating the ability of LLMs to perform simple reasoning, using the Miss Manners benchmark. This article ranks the LLMs on this benchmark and summarises the results.

Benchmark

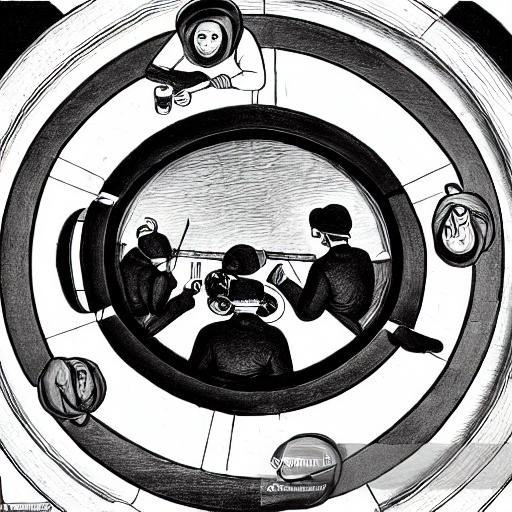

“Miss Manners” is organizing a dinner party and needs to devise a seating arrangement for her guests. She has a large circular table and will be inviting 16 guests: 8 males and 8 females. Miss Manners is an aging lady of a bygone era and isn’t aware that gender is not binary. She would like to ensure that guests are not seated next to someone of the same gender, and that guests seated next to each other share at least one hobby.
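Both constraints can be checked mechanically, which makes the benchmark easy to score. Below is a minimal Python sketch of such a check. It assumes a seating arrangement is given as a list of guest IDs in clockwise order; the `Guest` type, the `is_valid` helper and the four-guest example are illustrative and not part of the benchmark.

```python
from typing import NamedTuple


class Guest(NamedTuple):
    gender: str         # "m" or "f"
    hobbies: frozenset  # hobby numbers


def is_valid(seating, guests):
    """Return True if every pair of neighbours at a circular table
    differs in gender and shares at least one hobby."""
    n = len(seating)
    for i in range(n):
        a = guests[seating[i]]
        b = guests[seating[(i + 1) % n]]  # wrap around: last sits next to first
        if a.gender == b.gender:
            return False
        if not (a.hobbies & b.hobbies):
            return False
    return True


# Hypothetical four-guest example (not the benchmark data).
guests = {
    1: Guest("m", frozenset({1, 2})),
    2: Guest("f", frozenset({2, 3})),
    3: Guest("m", frozenset({3})),
    4: Guest("f", frozenset({1, 3})),
}

print(is_valid([1, 2, 3, 4], guests))  # alternating genders, shared hobbies
print(is_valid([1, 3, 2, 4], guests))  # guests 1 and 3 are both male
```

The modulo in `(i + 1) % n` is what encodes the circular table: without it, the pair formed by the last and first guests would never be checked.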

Test Data

The test data used for the benchmark comes from the Decision Management Community.

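| Name | Gender | Hobby 1 | Hobby 2 | Hobby 3 |
| --- | --- | --- | --- | --- |
| 1 | m | 2 | 1 | 3 |
| 2 | f | 2 | 1 | 3 |
| 3 | m | 3 | 2 | |
| 4 | m | 3 | 2 | 1 |
| 5 | m | 2 | 1 | 3 |
| 6 | m | 2 | 3 | 1 |
| 7 | f | 1 | 2 | 3 |
| 8 | m | 3 | 1 | |
| 9 | m | 2 | 3 | 1 |
| 10 | m | 3 | 2 | 1 |
| 11 | f | 1 | 3 | 2 |
| 12 | f | 3 | 1 | 2 |
| 13 | f | 2 | 3 | |
| 14 | f | 1 | 2 | |
| 15 | f | 2 | 3 | 1 |
| 16 | f | 2 | 3 | |

1. Find a Valid Seating Arrangement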

The first prompt issued is a request to find a valid seating arrangement that satisfies the gender and the hobby constraints. This prompt tests whether the LLM has understood the sample data, the constraints and the fact that the guests are seated around a circular table:

Help me plan the seating arrangements for a dinner party. All guests will be sitting at the same circular table. I want to ensure that people of the same sex (male or female) are not seated next to each other, and that people seated next to each other share a common hobby. Here is a table of the guests, their genders and hobbies: <sample data>

If necessary, additional prompts are issued to correct invalid seating arrangements.
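For reference, the task the first prompt poses is small enough to solve with a plain depth-first search. The sketch below is one hedged way to do it in Python and is not how any of the LLMs approach the problem. The `GUESTS` dictionary transcribes the test data, the `compatible` and `solve` names are illustrative, and guest 16 is read as having hobbies 2 and 3, which is an assumption.

```python
# Benchmark data: guest id -> (gender, hobbies).
GUESTS = {
    1: ("m", {1, 2, 3}), 2: ("f", {1, 2, 3}), 3: ("m", {2, 3}),
    4: ("m", {1, 2, 3}), 5: ("m", {1, 2, 3}), 6: ("m", {1, 2, 3}),
    7: ("f", {1, 2, 3}), 8: ("m", {1, 3}), 9: ("m", {1, 2, 3}),
    10: ("m", {1, 2, 3}), 11: ("f", {1, 2, 3}), 12: ("f", {1, 2, 3}),
    13: ("f", {2, 3}), 14: ("f", {1, 2}), 15: ("f", {1, 2, 3}),
    16: ("f", {2, 3}),  # assumption: read here as hobbies 2 and 3
}


def compatible(a, b):
    """Neighbours must differ in gender and share at least one hobby."""
    (gender_a, hobbies_a), (gender_b, hobbies_b) = GUESTS[a], GUESTS[b]
    return gender_a != gender_b and bool(hobbies_a & hobbies_b)


def solve(seated, remaining):
    """Depth-first search; returns a clockwise seating list or None."""
    if not remaining:
        # The table is circular, so the last guest must also suit the first.
        return seated if compatible(seated[-1], seated[0]) else None
    for g in sorted(remaining):
        if compatible(seated[-1], g):
            result = solve(seated + [g], remaining - {g})
            if result is not None:
                return result
    return None


seating = solve([1], set(GUESTS) - {1})
print(seating)
```

Every guest in this data lists at least two of the three hobbies, so any two guests share one. The alternating-gender rule and the wrap-around between the last and first seats are therefore the constraints that actually bind.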

2. Addition of Divorce Constraint

If a valid seating arrangement is found, then an additional constraint is added, stating that:

Guest Female(7) is recently divorced from guest Male(4). Could you take that into account?

The purpose of adding this constraint is to test whether the LLM can:

- Deduce that recently divorced people should not be seated next to one another

- Assess the existing solution to see whether it already meets this constraint

- Generate a new solution that incorporates this new constraint
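The second of these steps, assessing an existing solution, reduces to a circular adjacency check. The case that is easy to miss is the wrap-around between the last and first seats. Below is a minimal Python sketch; the `are_adjacent` helper and the example seating are illustrative assumptions and are not taken from any model's output.

```python
def are_adjacent(seating, a, b):
    """True if guests a and b sit next to each other at a circular table."""
    n = len(seating)
    i, j = seating.index(a), seating.index(b)
    # Positions differ by one seat in either direction, modulo the table size.
    return (i - j) % n in (1, n - 1)


# Illustrative seating (clockwise guest IDs), not any model's actual answer.
seating = [4, 2, 1, 11, 3, 12, 5, 13, 6, 14, 8, 15, 9, 16, 10, 7]

# Guests 7 and 4 occupy the last and first seats, so they are neighbours.
print(are_adjacent(seating, 7, 4))
# Guests 7 and 1 are several seats apart.
print(are_adjacent(seating, 7, 1))
```

A check that only compares consecutive list positions would report guests 7 and 4 as separated here, even though they sit side by side once the table is treated as a circle.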

3. Self-Critique

A prompt is then issued to see how well the LLM can evaluate its own performance:

What are the problems with this arrangement?

The goal is to determine whether the LLM generates incorrect explanations, or provides useful caveats for the seating arrangement.

Results

The table below summarises the current results. Please refer to the linked articles for details on the performance of each LLM.

| Rank | LLM | Notes |
| --- | --- | --- |
| 1 | Mistral (Large) | 👍 Zero-shot, Mistral found a good solution to the basic 16-person seating problem. 👍 The statement of the recent divorce between two guests was correctly inferred to mean they should not be seated next to each other. 👎 It failed to notice that its solution already accounted for the two recently divorced guests, and in attempting to account for that it overlooked the fact that the table is circular. 👍 When asked to analyse its solution it correctly determined that it was valid and listed some useful caveats. |
| 2 | Claude Opus | 👍 Zero-shot, Opus found a good solution to the basic 16-person seating problem. 👍 The statement of the recent divorce between two guests was correctly inferred to mean they should not be seated next to each other. 👎 It failed to notice that its solution already accounted for the two recently divorced guests, and in attempting to account for that it created a solution that DID seat the divorced couple next to each other, along with an incorrect explanation of what it had done. 👍 It was able to analyse solutions and correct them when invalid. 👍 When asked to analyse its solution it correctly determined that it was valid and listed some useful caveats. |
| 3 | GPT4 | 👍 Zero-shot, GPT4 found a good solution to the basic 16-person seating problem. 👍 The statement of the recent divorce between two guests was correctly inferred to mean they should not be seated next to each other. 👎 It failed to notice that its solution already accounted for the two recently divorced guests, and in attempting to account for that it created a solution that DID seat the divorced couple next to each other, along with an incorrect explanation of what it had done. 👎 When asked to analyse its solution it incorrectly determined that it was invalid and added some confusing text about the ranking of hobbies. It did list some useful caveats. |
| 4 | ChatGPT | 👍 With minimal prodding/prompting ChatGPT found a good solution to the basic 16-person seating problem. 👍 The statement of the recent divorce between two guests was correctly inferred to mean they should not be seated next to each other. 👎 However, once the additional divorce constraint was added ChatGPT started to struggle. It failed to find a valid solution and instead confidently offered invalid solutions. When prompted to correct its errors it again confidently offered an invalid solution. 👎 When ChatGPT was prompted to analyse its solution it confidently generated an invalid explanation for why the solution was invalid. 👍 When finally asked whether a solution was possible, ChatGPT found one! |
| 5 | Gemini | 👍 With repeated prodding/prompting Gemini found a good solution to the basic 16-person seating problem. 👍 The statement of the recent divorce between two guests was correctly inferred to mean they should not be seated next to each other. 👎 However, once the additional divorce constraint was added Gemini started to struggle. It failed to find a valid solution and instead confidently offered invalid solutions. When prompted to correct its errors it again confidently offered an invalid solution, getting stuck in a loop of invalid solutions. 👎 When Gemini was prompted to analyse its solution it confidently generated an invalid explanation for why the solution was invalid. |
